Datasets:

first commit

Browse files- README.md +208 -45

- download_videos.py +39 -0

- egoexo4d_uids.json +100 -0

- lookup_action_to_split.json +20 -0

- n_differences.json +42 -0

- videos_fitness.zip +3 -0

- videos_surgery.zip +3 -0

README.md

CHANGED

|

@@ -1,45 +1,208 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

| 24 |

-

|

| 25 |

-

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

|

| 30 |

-

|

| 31 |

-

|

| 32 |

-

|

| 33 |

-

|

| 34 |

-

|

| 35 |

-

|

| 36 |

-

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

-

|

| 42 |

-

|

| 43 |

-

|

| 44 |

-

|

| 45 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Dataset card for "VidDiffBench"

|

| 2 |

+

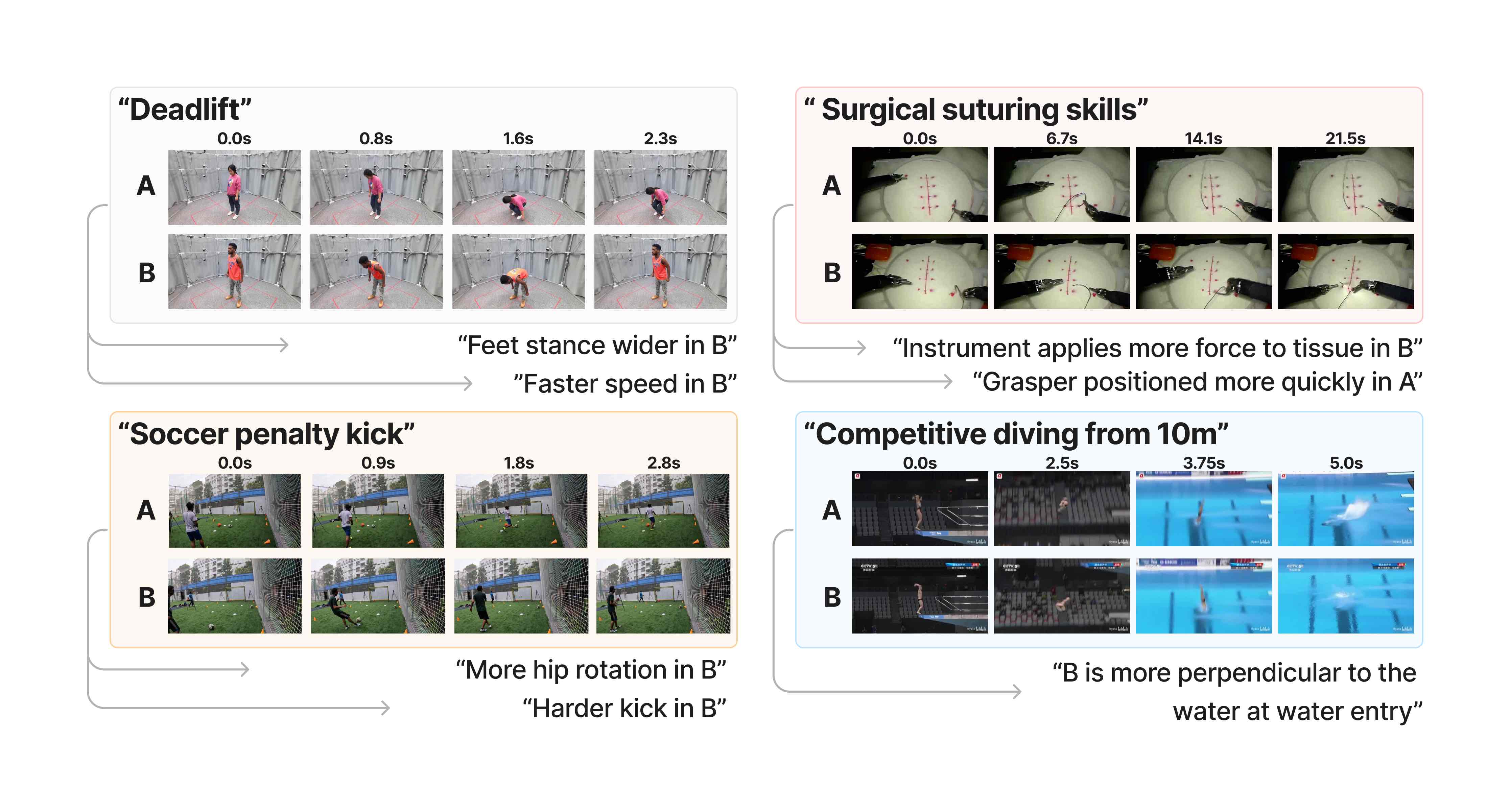

This is the dataset / benchmark for [Video Action Differencing](https://openreview.net/forum?id=3bcN6xlO6f) (ICLR 2025), a new task that compares how an action is performed between two videos. This page introduces the task, the dataset structure, and how to access the data. See the paper for details on dataset construction. The code for running evaluation, and for benchmarking popular LMMs is at [https://jmhb0.github.io/viddiff](https://jmhb0.github.io/viddiff).

|

| 3 |

+

|

| 4 |

+

```

|

| 5 |

+

@inproceedings{burgessvideo,

|

| 6 |

+

title={Video Action Differencing},

|

| 7 |

+

author={Burgess, James and Wang, Xiaohan and Zhang, Yuhui and Rau, Anita and Lozano, Alejandro and Dunlap, Lisa and Darrell, Trevor and Yeung-Levy, Serena},

|

| 8 |

+

booktitle={The Thirteenth International Conference on Learning Representations}

|

| 9 |

+

}

|

| 10 |

+

```

|

| 11 |

+

|

| 12 |

+

# The Video Action Differencing task: closed and open evaluation

|

| 13 |

+

The Video Action Differencing task compares two videos of the same action. The goal is to identify differences in how the action is performed, where the differences are expressed in natural language.

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

In closed evaluation:

|

| 18 |

+

- Input: two videos of the same action, action description string, a list of candidate difference strings.

|

| 19 |

+

- Output: for each difference string, either 'a' if the statement applies more to video a, or 'b' if it applies more to video 'b'.

|

| 20 |

+

|

| 21 |

+

In open evaluation, the model must generate the difference strings:

|

| 22 |

+

- Input: two videos of the same action, action description string, a number 'n_differences'.

|

| 23 |

+

- Output: a list of difference strings (at most 'n_differences'). For each difference string, 'a' if the statement applies more to video a, or 'b' if it applies more to video 'b'.

|

| 24 |

+

|

| 25 |

+

<!--

|

| 26 |

+

Some more details on these evaluation modes. See the paper for more discussion:

|

| 27 |

+

- In closed eval, we only provide difference strings where the gt label is 'a' or 'b'; if the gt label is 'c' meaning "not different", it's skipped. This is because different annotators (or models) may have different calibration: a different judgement of "how different is different enough".

|

| 28 |

+

- In open evaluation, the model is allowed to predict at most `n_differences`, which we set to be 1.5x the number of differences we included in our annotation taxonomy. This is because there may be valid differences not in our annotation set, and models should not be penalized for that. But a limit is required to prevent cheating by enumerating too many possible differences.

|

| 29 |

+

|

| 30 |

+

The eval scripts are at [https://jmhb0.github.io/viddiff](https://jmhb0.github.io/viddiff).

|

| 31 |

+

-->

|

| 32 |

+

|

| 33 |

+

# Dataset structure

|

| 34 |

+

After following the 'getting the data' section: we have `dataset` as a HuggingFace dataset and `videos` as a list. For row `i`: video A is `videos[0][i]`, video B is `videos[1][i]`, and `dataset[i]` is the annotation for the difference between the videos.

|

| 35 |

+

|

| 36 |

+

The videos:

|

| 37 |

+

- `videos[0][i]['video']` and is a numpy array with shape `(nframes,H,W,3)`.

|

| 38 |

+

- `videos[0][i]['fps_original']` is an int, frames per second.

|

| 39 |

+

|

| 40 |

+

The annotations in `dataset`:

|

| 41 |

+

- `sample_key` a unique key.

|

| 42 |

+

- `videos` metadata about the videos A and B used by the dataloader: the video filename, and the start and end frames.

|

| 43 |

+

- `action` action key like "fitness_2"

|

| 44 |

+

- `action_name` a short action name, like "deadlift"

|

| 45 |

+

- `action_description` a longer action description, like "a single free weight deadlift without any weight"

|

| 46 |

+

- `source_dataset` the source dataset for the videos (but not annotation), e.g. 'humman' [here](https://caizhongang.com/projects/HuMMan/).

|

| 47 |

+

- `split` difficulty split, one of `{'easy', 'medium', 'hard'}`

|

| 48 |

+

- `n_differences_open_prediction` in open evaluation, the max number of difference strings the model is allowed to generate.

|

| 49 |

+

- `differences_annotated` a dict with the difference strings, e.g:

|

| 50 |

+

```

|

| 51 |

+

{

|

| 52 |

+

"0": {

|

| 53 |

+

"description": "the feet stance is wider",

|

| 54 |

+

"name": "feet stance wider",

|

| 55 |

+

"num_frames": "1",

|

| 56 |

+

},

|

| 57 |

+

"1": {

|

| 58 |

+

"description": "the speed of hip rotation is faster",

|

| 59 |

+

"name": "speed",

|

| 60 |

+

"num_frames": "gt_1",

|

| 61 |

+

},

|

| 62 |

+

"2" : null,

|

| 63 |

+

...

|

| 64 |

+

```

|

| 65 |

+

- and these keys are:

|

| 66 |

+

- the key is the 'key_difference'

|

| 67 |

+

- `description` is the 'difference string' (passed as input in closed eval, or the model must generate a semantically similar string in open eval).

|

| 68 |

+

- `num_frames` (not used) is '1' if an LMM could solve it from a single (well-chosen) frame, or 'gt_1' if more frames are needed.

|

| 69 |

+

- Some values might be `null`. This is because the Huggingface datasets enforces that all elements in a column have the same schema.

|

| 70 |

+

- `differences_gt` has the gt label, e.g. `{"0": "b", "1":"a", "2":null}`. For example, difference "the feet stance is wider" applies more to video B.

|

| 71 |

+

- `domain` activity domain. One of `{'fitness', 'ballsports', 'diving', 'surgery', 'music'}`.

|

| 72 |

+

|

| 73 |

+

# Getting the data

|

| 74 |

+

Getting the dataset requires a few steps. We distribute the annotations, but since we don't own the videos, you'll have to download them elsewhere.

|

| 75 |

+

|

| 76 |

+

**Get the annotations**

|

| 77 |

+

|

| 78 |

+

First, get the annotations from the hub like this:

|

| 79 |

+

```

|

| 80 |

+

from datasets import load_dataset

|

| 81 |

+

repo_name = "viddiff/VidDiffBench"

|

| 82 |

+

dataset = load_dataset(repo_name)

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

**Get the videos**

|

| 86 |

+

|

| 87 |

+

We get videos from prior works (which should be cited if you use the benchmark - see the end of this doc).

|

| 88 |

+

The source dataset is in the dataset column `source_dataset`.

|

| 89 |

+

|

| 90 |

+

First, download some `.py` files from this repo into your local `data/` file.

|

| 91 |

+

```

|

| 92 |

+

GIT_LFS_SKIP_SMUDGE=1 git clone [email protected]:datasets/viddiff/VidDiffBench data/

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

A few datasets let us redistribute videos, so you can download them from this HF repo like this:

|

| 96 |

+

```

|

| 97 |

+

python data/download_data.py

|

| 98 |

+

```

|

| 99 |

+

|

| 100 |

+

If you ONLY need the 'easy' split, you can stop here. The videos includes the source datasets [Humann](https://caizhongang.com/projects/HuMMan/) (and 'easy' only draws from this data) and [JIGSAWS](https://cirl.lcsr.jhu.edu/research/hmm/datasets/jigsaws_release/).

|

| 101 |

+

|

| 102 |

+

For 'medium' and 'hard' splits, you'll need to download these other datasets from the EgoExo4D and FineDiving. Here's how to do that:

|

| 103 |

+

|

| 104 |

+

*Download EgoExo4d videos*

|

| 105 |

+

|

| 106 |

+

These are needed for 'medium' and 'hard' splits. First Request an access key from the [docs](https://docs.ego-exo4d-data.org/getting-started/) (it takes 48hrs). Then follow the instructions to install the CLI download tool `egoexo`. We only need a small number of these videos, so get the uids list from `data/egoexo4d_uids.json` and use `egoexo` to download:

|

| 107 |

+

```

|

| 108 |

+

uids=$(jq -r '.[]' data/egoexo4d_uids.json | tr '\n' ' ' | sed 's/ $//')

|

| 109 |

+

egoexo -o data/src_EgoExo4D --parts downscaled_takes/448 --uids $uids

|

| 110 |

+

|

| 111 |

+

```

|

| 112 |

+

Common issue: remember to put your access key into `~/.aws/credentials`.

|

| 113 |

+

|

| 114 |

+

*Download FineDiving videos*

|

| 115 |

+

|

| 116 |

+

These are needed for 'medium' split. Follow the instructions in [the repo](https://github.com/xujinglin/FineDiving) to request access (it takes at least a day), download the whole thing, and set up a link to it:

|

| 117 |

+

```

|

| 118 |

+

ln -s <path_to_fitnediving> data/src_FineDiving

|

| 119 |

+

```

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

**Making the final dataset with videos**

|

| 123 |

+

|

| 124 |

+

Install these packages:

|

| 125 |

+

```

|

| 126 |

+

pip install numpy Pillow datasets decord lmdb tqdm huggingface_hub

|

| 127 |

+

```

|

| 128 |

+

Now run:

|

| 129 |

+

```

|

| 130 |

+

from data.load_dataset import load_dataset, load_all_videos

|

| 131 |

+

dataset = load_dataset(splits=['easy'], subset_mode="0") # splits are one of {'easy','medium','hard'}

|

| 132 |

+

videos = load_all_videos(dataset, cache=True, cache_dir="cache/cache_data")

|

| 133 |

+

```

|

| 134 |

+

For row `i`: video A is `videos[0][i]`, video B is `videos[1][i]`, and `dataset[i]` is the annotation for the difference between the videos. For video A, the video itself is `videos[0][i]['video']` and is a numpy array with shape `(nframes,3,H,W)`; the fps is in `videos[0][i]['fps_original']`.

|

| 135 |

+

|

| 136 |

+

By passing the argument `cache=True` to `load_all_videos`, we create a cache directory at `cache/cache_data/`, and save copies of the videos using numpy memmap (total directory size for the whole dataset is 55Gb). Loading the videos and caching will take a few minutes per split (faster for the 'easy' split), and about 25mins for the whole dataset. But on subsequent runs, it should be fast - a few seconds for the whole dataset.

|

| 137 |

+

|

| 138 |

+

Finally, you can get just subsets, for example setting `subset_mode='3_per_action'` will take 3 video pairs per action, while `subset_mode="0"` gets them all.

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

# More dataset info

|

| 142 |

+

We have more dataset metadata in this dataset repo:

|

| 143 |

+

- Differences taxonomy `data/difference_taxonomy.csv`.

|

| 144 |

+

- Actions and descriptions `data/actions.csv`.

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

# License

|

| 148 |

+

|

| 149 |

+

The annotations and all other non-video metadata is realeased under an MIT license.

|

| 150 |

+

|

| 151 |

+

The videos retain the license of the original dataset creators, and the source dataset is given in dataset column `source_dataset`.

|

| 152 |

+

- EgoExo4D, license is online at [this link](https://ego4d-data.org/pdfs/Ego-Exo4D-Model-License.pdf)

|

| 153 |

+

- JIGSAWS release notes at [this link](https://cirl.lcsr.jhu.edu/research/hmm/datasets/jigsaws_release/ )

|

| 154 |

+

- Humman uses "S-Lab License 1.0" at [this link](https://caizhongang.com/projects/HuMMan/license.txt)

|

| 155 |

+

- FineDiving use [this MIT license](https://github.com/xujinglin/FineDiving/blob/main/LICENSE)

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

# Citation

|

| 159 |

+

Below is the citation for our paper, and the original source datasets:

|

| 160 |

+

```

|

| 161 |

+

@inproceedings{burgessvideo,

|

| 162 |

+

title={Video Action Differencing},

|

| 163 |

+

author={Burgess, James and Wang, Xiaohan and Zhang, Yuhui and Rau, Anita and Lozano, Alejandro and Dunlap, Lisa and Darrell, Trevor and Yeung-Levy, Serena},

|

| 164 |

+

booktitle={The Thirteenth International Conference on Learning Representations}

|

| 165 |

+

}

|

| 166 |

+

|

| 167 |

+

|

| 168 |

+

@inproceedings{cai2022humman,

|

| 169 |

+

title={{HuMMan}: Multi-modal 4d human dataset for versatile sensing and modeling},

|

| 170 |

+

author={Cai, Zhongang and Ren, Daxuan and Zeng, Ailing and Lin, Zhengyu and Yu, Tao and Wang, Wenjia and Fan,

|

| 171 |

+

Xiangyu and Gao, Yang and Yu, Yifan and Pan, Liang and Hong, Fangzhou and Zhang, Mingyuan and

|

| 172 |

+

Loy, Chen Change and Yang, Lei and Liu, Ziwei},

|

| 173 |

+

booktitle={17th European Conference on Computer Vision, Tel Aviv, Israel, October 23--27, 2022,

|

| 174 |

+

Proceedings, Part VII},

|

| 175 |

+

pages={557--577},

|

| 176 |

+

year={2022},

|

| 177 |

+

organization={Springer}

|

| 178 |

+

}

|

| 179 |

+

|

| 180 |

+

@inproceedings{parmar2022domain,

|

| 181 |

+

title={Domain Knowledge-Informed Self-supervised Representations for Workout Form Assessment},

|

| 182 |

+

author={Parmar, Paritosh and Gharat, Amol and Rhodin, Helge},

|

| 183 |

+

booktitle={Computer Vision--ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23--27, 2022, Proceedings, Part XXXVIII},

|

| 184 |

+

pages={105--123},

|

| 185 |

+

year={2022},

|

| 186 |

+

organization={Springer}

|

| 187 |

+

}

|

| 188 |

+

|

| 189 |

+

@inproceedings{grauman2024ego,

|

| 190 |

+

title={Ego-exo4d: Understanding skilled human activity from first-and third-person perspectives},

|

| 191 |

+

author={Grauman, Kristen and Westbury, Andrew and Torresani, Lorenzo and Kitani, Kris and Malik, Jitendra and Afouras, Triantafyllos and Ashutosh, Kumar and Baiyya, Vijay and Bansal, Siddhant and Boote, Bikram and others},

|

| 192 |

+

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

|

| 193 |

+

pages={19383--19400},

|

| 194 |

+

year={2024}

|

| 195 |

+

}

|

| 196 |

+

|

| 197 |

+

@inproceedings{gao2014jhu,

|

| 198 |

+

title={Jhu-isi gesture and skill assessment working set (jigsaws): A surgical activity dataset for human motion modeling},

|

| 199 |

+

author={Gao, Yixin and Vedula, S Swaroop and Reiley, Carol E and Ahmidi, Narges and Varadarajan, Balakrishnan and Lin, Henry C and Tao, Lingling and Zappella, Luca and B{\'e}jar, Benjam{\i}n and Yuh, David D and others},

|

| 200 |

+

booktitle={MICCAI workshop: M2cai},

|

| 201 |

+

volume={3},

|

| 202 |

+

number={2014},

|

| 203 |

+

pages={3},

|

| 204 |

+

year={2014}

|

| 205 |

+

}

|

| 206 |

+

```

|

| 207 |

+

|

| 208 |

+

|

download_videos.py

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from huggingface_hub import hf_hub_download

|

| 2 |

+

import zipfile

|

| 3 |

+

import os

|

| 4 |

+

|

| 5 |

+

def download_and_extract_videos(dataset_name, output_dir, split):

|

| 6 |

+

# Create output directory if it doesn't exist

|

| 7 |

+

os.makedirs(output_dir, exist_ok=True)

|

| 8 |

+

|

| 9 |

+

# Download the zip file

|

| 10 |

+

# print(f"Downloading {zip_filename} from the dataset...")

|

| 11 |

+

zip_path = hf_hub_download(

|

| 12 |

+

repo_id=dataset_name,

|

| 13 |

+

filename=f"data/videos_{split}.zip",

|

| 14 |

+

repo_type="dataset",

|

| 15 |

+

local_dir=output_dir,

|

| 16 |

+

)

|

| 17 |

+

|

| 18 |

+

# Extract the zip file

|

| 19 |

+

with zipfile.ZipFile(zip_path, 'r') as zip_ref:

|

| 20 |

+

for file_info in zip_ref.infolist():

|

| 21 |

+

extracted_path = os.path.join(output_dir, file_info.filename)

|

| 22 |

+

if file_info.filename.endswith('/'):

|

| 23 |

+

os.makedirs(extracted_path, exist_ok=True)

|

| 24 |

+

else:

|

| 25 |

+

os.makedirs(os.path.dirname(extracted_path), exist_ok=True)

|

| 26 |

+

with zip_ref.open(file_info) as source, open(extracted_path, "wb") as target:

|

| 27 |

+

target.write(source.read())

|

| 28 |

+

|

| 29 |

+

print(f"Videos extracted to {output_dir}")

|

| 30 |

+

|

| 31 |

+

# Optional: Remove the downloaded zip file

|

| 32 |

+

# os.remove(zip_path)

|

| 33 |

+

|

| 34 |

+

# Usage

|

| 35 |

+

dataset_name = "viddiff/VidDiffBench"

|

| 36 |

+

output_dir = "."

|

| 37 |

+

splits = ['ballsports', 'music', 'diving', 'fitness', 'surgery']

|

| 38 |

+

for split in splits:

|

| 39 |

+

download_and_extract_videos(dataset_name, output_dir, split)

|

egoexo4d_uids.json

ADDED

|

@@ -0,0 +1,100 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[

|

| 2 |

+

"5d25cc61-f04d-47e3-9f0e-dbbf7707f0a0",

|

| 3 |

+

"181b7c2f-4854-4d1c-83e0-dbc7d7c92df3",

|

| 4 |

+

"7722005b-d53c-4dbe-a8c9-60f0f50deeb8",

|

| 5 |

+

"a3214a2b-be14-44fe-b4b4-791beb788b77",

|

| 6 |

+

"63156ba0-fabc-49e2-bb93-a8a0c5028ad0",

|

| 7 |

+

"989ffb65-7466-49fd-94cb-6d3d4d528a8b",

|

| 8 |

+

"b5f8232e-5686-43ba-8e7a-ffeadb232444",

|

| 9 |

+

"354f076e-079f-440d-bd38-97ddfcd19002",

|

| 10 |

+

"96816348-c93b-4b46-b4e9-2f53d96f9f88",

|

| 11 |

+

"b64755de-fc1c-4e36-a862-04e4c34a2dfa",

|

| 12 |

+

"30206a66-d533-43cd-a1b3-cd092c47925c",

|

| 13 |

+

"c01e068d-c018-4a07-9a64-e7004fca335c",

|

| 14 |

+

"2a5d2d00-f7cf-4228-ad3b-553d303e069f",

|

| 15 |

+

"9357c6f2-9c17-4259-a4bd-7a715e7331e4",

|

| 16 |

+

"207ae687-de62-4b01-b26f-fb66fd167884",

|

| 17 |

+

"85593061-50be-45f1-9078-d489822abc92",

|

| 18 |

+

"020ae1ca-02ad-49c8-9056-87ad6bdcb8bd",

|

| 19 |

+

"6d01e433-1ada-4363-afa8-7a2bf780ac80",

|

| 20 |

+

"7d83d5fb-1039-444c-83fb-bcc2f4a7dcf2",

|

| 21 |

+

"ff29aa81-555c-4eb2-a79f-10e27e7f09c7",

|

| 22 |

+

"609123eb-8e83-41f9-9cd5-524fc01f3ea9",

|

| 23 |

+

"95250e21-8238-43e5-b11b-95f978ae81ed",

|

| 24 |

+

"024a7ee5-df4d-4dd0-a890-b13adc8ed431",

|

| 25 |

+

"705d49e6-20f4-4c32-ae7d-dfa50abfe7fc",

|

| 26 |

+

"d7b4b35f-715d-40bd-a163-03ec0ab6638f",

|

| 27 |

+

"7f691664-7655-4971-9c0a-75da85810879",

|

| 28 |

+

"aaa7e120-2224-4a5b-96ef-4a4b054a6ccd",

|

| 29 |

+

"0a178901-e191-41e6-8df3-474106a5c938",

|

| 30 |

+

"909679ff-1c53-496c-abfa-fd1bab12d325",

|

| 31 |

+

"6abf4872-3bc1-4e63-8fb6-90f796505e31",

|

| 32 |

+

"3ac49447-8437-4e1a-b772-06f4c79afe96",

|

| 33 |

+

"bee03368-c65a-4b24-a572-5e799a8baa2c",

|

| 34 |

+

"55a996a2-cdf3-4976-b475-a7c4c6a008a8",

|

| 35 |

+

"b018b84a-9999-485f-8e0f-fb6c4cb2fcf4",

|

| 36 |

+

"34d092b6-7e39-4048-af8b-ada7c44bb59a",

|

| 37 |

+

"96abbf92-97cf-406b-910d-59d139c9da79",

|

| 38 |

+

"dc3e63c1-5b14-46f3-8230-5c93249e9469",

|

| 39 |

+

"513841ed-662b-43a0-b670-98feb66a8d83",

|

| 40 |

+

"943815f7-ea36-44a9-ade4-3407a0992481",

|

| 41 |

+

"9fe91f54-5d6c-43ba-aed0-cd8b1aae5d98",

|

| 42 |

+

"c97bfa47-1ca4-40f6-a463-b4f25c8b127d",

|

| 43 |

+

"e58755eb-18e3-4cb3-b52a-2bb31de7e275",

|

| 44 |

+

"d7b30d31-1614-4089-a26a-7e9557265ab4",

|

| 45 |

+

"932df016-3216-4809-87d8-05ec1b0106a9",

|

| 46 |

+

"f4312ec8-75ab-4ab9-9172-9558b6bd963b",

|

| 47 |

+

"0b7cd6fd-7b71-4ccd-89ba-892e2c0f5597",

|

| 48 |

+

"5cb6025b-6cd8-4a31-9611-02f5d7260ace",

|

| 49 |

+

"5a6d246e-6d6a-41af-b57b-580eba638ceb",

|

| 50 |

+

"eb38a264-e494-4a9c-9115-f55ea6754e39",

|

| 51 |

+

"8c2dcf73-5b6c-48ae-87d2-95bea5cb7b72",

|

| 52 |

+

"77d985cb-eff9-43ca-b5e2-e2d1433d0d4b",

|

| 53 |

+

"41e21155-f00c-4b03-a5ce-905e435f0f92",

|

| 54 |

+

"114984e0-4de8-4db7-afc8-0c66926f65f4",

|

| 55 |

+

"783ea71f-5f82-41da-986f-b69025680e27",

|

| 56 |

+

"b016b093-27d8-4507-84d3-1905141bd715",

|

| 57 |

+

"9e2bd99e-80b6-433d-82d0-0fc18efc34ab",

|

| 58 |

+

"92899354-8715-4525-9449-1b1d23ecd057",

|

| 59 |

+

"f1b08b01-3476-40cb-89c8-85860d59e1f6",

|

| 60 |

+

"90e697a5-2cff-4ecc-a580-f4c230a5488c",

|

| 61 |

+

"46deacb7-2d19-4b18-b440-170f9ea7f28a",

|

| 62 |

+

"2e3ac19b-c10a-4fc1-a812-ef2cf0956fb9",

|

| 63 |

+

"236fc37a-0b3a-493d-9bc8-8b1ff434c213",

|

| 64 |

+

"65268148-70eb-419c-b8cc-eddfbe9576dd",

|

| 65 |

+

"9ebe4ac3-1472-4094-9e73-6014e78e0539",

|

| 66 |

+

"2bc8abe8-e4d1-46e2-8aa0-134e30674456",

|

| 67 |

+

"21f82af2-ac7d-4839-ba61-2adf7fe55964",

|

| 68 |

+

"4cad2ed5-6270-4899-9fbf-b006390df277",

|

| 69 |

+

"976e486c-0292-4ee1-bed4-05a3e5b0d6cd",

|

| 70 |

+

"2ac272cd-787e-4f36-9ca7-94339c15a992",

|

| 71 |

+

"f291d174-596d-471f-836b-993315197824",

|

| 72 |

+

"cac56c11-1de1-476f-a05d-f43c0cf99893",

|

| 73 |

+

"a1b56b36-68f6-4238-b5bf-a830dc8758bf",

|

| 74 |

+

"853cdf8c-e669-40f3-95bf-1ed7cd2bbb75",

|

| 75 |

+

"2fbd4068-86e0-4893-b9c6-10c88fa8275c",

|

| 76 |

+

"b74169ba-77b0-447d-aeff-cb7934ff6711",

|

| 77 |

+

"caa16834-c43b-44a0-b251-9b465533df5e",

|

| 78 |

+

"b863ecd5-c282-478c-8e7b-748b30aff1b1",

|

| 79 |

+

"a5b0f86c-5449-4c10-8366-e9d74662faf3",

|

| 80 |

+

"69440a67-bb0d-40e9-87f4-a77b2bffbe78",

|

| 81 |

+

"8a0ea233-beeb-42cf-84b8-d25bda4ad967",

|

| 82 |

+

"ede28f52-3ab0-4da8-808e-33ba5a1fae28",

|

| 83 |

+

"e4cb7425-0cb6-437b-8718-110f5da24e53",

|

| 84 |

+

"cb4a441d-0dae-4bec-90e3-1b9dab39e269",

|

| 85 |

+

"d6f00914-84db-4654-bdb6-2fd74dca4265",

|

| 86 |

+

"eb83782e-63ca-40f9-874d-a933fd60e714",

|

| 87 |

+

"15280378-3659-4d3d-b6b7-fa243709c89c",

|

| 88 |

+

"444075c4-aed8-4c06-81cf-d05df0bcb207",

|

| 89 |

+

"83783976-189a-460f-9068-7ad5b17dd815",

|

| 90 |

+

"4c3ee253-c4a5-4d56-b990-600b132e0b43",

|

| 91 |

+

"51c1db43-a355-4d50-a9eb-18d1248e09ee",

|

| 92 |

+

"0669b09c-fda3-4fbb-9c41-b9b1fd3ff31e",

|

| 93 |

+

"4cbe9bb5-597a-446d-8613-952fd82dfc13",

|

| 94 |

+

"f78ad5b9-8960-493e-8c8a-ce19a53c12f7",

|

| 95 |

+

"fff0f0a0-d5f1-4533-879c-8928f76b8278",

|

| 96 |

+

"0bf37b5f-11fb-4788-8b6b-1c0277d585ca",

|

| 97 |

+

"a8d04142-fc0b-4ad4-acaa-8c17424411ff",

|

| 98 |

+

"066cccd7-d7ca-4ce3-a80e-90ce9013c1ab",

|

| 99 |

+

"f7117001-1764-446a-a57f-cbb12b1a92bd"

|

| 100 |

+

]

|

lookup_action_to_split.json

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"diving_0": "medium",

|

| 3 |

+

"fitness_0": "easy",

|

| 4 |

+

"fitness_1": "medium",

|

| 5 |

+

"fitness_2": "medium",

|

| 6 |

+

"fitness_3": "easy",

|

| 7 |

+

"fitness_4": "easy",

|

| 8 |

+

"fitness_5": "medium",

|

| 9 |

+

"fitness_6": "easy",

|

| 10 |

+

"fitness_7": "medium",

|

| 11 |

+

"surgery_0": "hard",

|

| 12 |

+

"surgery_1": "hard",

|

| 13 |

+

"surgery_2": "hard",

|

| 14 |

+

"ballsports_0": "medium",

|

| 15 |

+

"ballsports_2": "medium",

|

| 16 |

+

"ballsports_1": "medium",

|

| 17 |

+

"ballsports_3": "medium",

|

| 18 |

+

"music_0": "hard",

|

| 19 |

+

"music_1": "hard"

|

| 20 |

+

}

|

n_differences.json

ADDED

|

@@ -0,0 +1,42 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"music": {

|

| 3 |

+

"music_0": 10,

|

| 4 |

+

"music_1": 10

|

| 5 |

+

},

|

| 6 |

+

"diving": {

|

| 7 |

+

"diving_0": 10

|

| 8 |

+

},

|

| 9 |

+

"easy": {

|

| 10 |

+

"easy_0": 5,

|

| 11 |

+

"easy_1": 5,

|

| 12 |

+

"easy_2": 5,

|

| 13 |

+

"easy_3": 5,

|

| 14 |

+

"easy_4": 5

|

| 15 |

+

},

|

| 16 |

+

"fitness": {

|

| 17 |

+

"fitness_0": 10,

|

| 18 |

+

"fitness_1": 16,

|

| 19 |

+

"fitness_2": 15,

|

| 20 |

+

"fitness_3": 9,

|

| 21 |

+

"fitness_4": 5,

|

| 22 |

+

"fitness_5": 6,

|

| 23 |

+

"fitness_6": 10,

|

| 24 |

+

"fitness_7": 16

|

| 25 |

+

},

|

| 26 |

+

"surgery": {

|

| 27 |

+

"surgery_0": 13,

|

| 28 |

+

"surgery_1": 16,

|

| 29 |

+

"surgery_2": 12

|

| 30 |

+

},

|

| 31 |

+

"demo": {

|

| 32 |

+

"demo_0": 5,

|

| 33 |

+

"demo_1": 5,

|

| 34 |

+

"demo_2": 5

|

| 35 |

+

},

|

| 36 |

+

"ballsports": {

|

| 37 |

+

"ballsports_0": 12,

|

| 38 |

+

"ballsports_1": 24,

|

| 39 |

+

"ballsports_2": 16,

|

| 40 |

+

"ballsports_3": 9

|

| 41 |

+

}

|

| 42 |

+

}

|

videos_fitness.zip

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:248496186897846d3c1249ca87a13619f91217509f9b72e54f734d955dd34619

|

| 3 |

+

size 4700405421

|

videos_surgery.zip

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3ebf18faeed38356666a16107519f2e600894af5cef9c77985a6ffb4846b4018

|

| 3 |

+

size 166835540

|