How to run 🐳 DeepSite locally

Hi everyone 👋

Some of you have asked me how to use DeepSite locally. It's actually super easy!

Thanks to Inference Providers, you'll be able to switch between different providers just like in the online application. The cost should also be very low (a few cents at most).

Run DeepSite locally

- Clone the repo using git

git clone https://huggingface.co/spaces/enzostvs/deepsite

- Install the dependencies (make sure node is installed on your machine)

npm install

- Create your .env file and add the HF_TOKEN variable

Make sure to create a token with inference permissions and optionally write permissions (if you want to deploy your results in Spaces).

- Build the project

npm run build

- Start it and enjoy with a coffee ☕

npm run start

To make sure everything is set up correctly, you should see this banner in the top-right corner.
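The steps above can be collected into one small script. This is only a sketch: the helper names `write_env_file` and `setup_deepsite` are made up for illustration and are not part of DeepSite, and it assumes `git`, `node`, and `npm` are already on your PATH.

```shell
# Sketch only: helper names are illustrative, not part of DeepSite.

# Write HF_TOKEN into <dir>/.env and keep the file readable only by you.
write_env_file() {
  if [ -z "$1" ] || [ -z "$2" ]; then
    echo "usage: write_env_file <dir> <token>" >&2
    return 1
  fi
  printf 'HF_TOKEN=%s\n' "$2" > "$1/.env"
  chmod 600 "$1/.env"
}

# Run the steps from the post in order and stop at the first failure.
# The body runs in a subshell so the caller's working directory is unchanged.
setup_deepsite() (
  if [ -z "$1" ]; then
    echo "usage: setup_deepsite <token>" >&2
    exit 1
  fi
  git clone https://huggingface.co/spaces/enzostvs/deepsite &&
    cd deepsite &&
    npm install &&
    write_env_file . "$1" &&
    npm run build &&
    npm run start
)
```

After sourcing the file, `setup_deepsite "YOUR_TOKEN"` runs everything in one go. Keep in mind that a token passed on the command line may end up in your shell history, so creating the .env file by hand is just as reasonable.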

Feel free to ask questions or report any issues related to local usage below 👇

Thank you all!

It would be cool to provide instructions for running this in docker. I tried it yesterday and got it running although it gave an error when trying to use it. I did not look into what was causing it yet though.
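For anyone trying the Docker route, the commands might look like the sketch below. It rests on several assumptions: that the repo has a Dockerfile at its root (a later comment in this thread mentions using one), that the image name `deepsite-local` is arbitrary, and that the app listens inside the container on the same port you pass in. The thread does not say which port that is, so it is left as a required argument. The helper names are hypothetical.

```shell
# Sketch only: helper names, image name, and port mapping are assumptions.

# Execute the command, or just print it when DRY_RUN=1.
run_or_print() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Build the image from the current directory, then run it with the
# variables from the env file (default: .env) passed into the container.
deepsite_docker() {
  if [ -z "$1" ]; then
    echo "usage: deepsite_docker <port> [env-file]" >&2
    return 1
  fi
  run_or_print docker build -t deepsite-local . &&
    run_or_print docker run --rm --env-file "${2:-.env}" -p "$1:$1" deepsite-local
}
```

Run it from the cloned folder, for example `deepsite_docker 3000`, or set `DRY_RUN=1` first to see the commands without executing them. Note that `docker run --env-file` takes values literally, so the token in .env should not be wrapped in quotes.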

Hi

this works great thank you!

WOW

I'm getting "Invalid credentials in Authorization header"

I'm not really that familiar with running stuff locally

getting error as "Invalid credentials in Authorization header"

Are you sure you did those steps correctly?

- Create a token with inference permissions: https://huggingface.co/settings/tokens/new?ownUserPermissions=repo.content.read&ownUserPermissions=repo.write&ownUserPermissions=inference.serverless.write&tokenType=fineGrained then copy it to your clipboard

- Create a new file named .env in the DeepSite folder you cloned and paste your token in it, so it looks like this:

HF_TOKEN=THE_TOKEN_YOU_JUST_CREATED

- Launch the app again
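A quick way to rule out the most common slip in the steps above is to check the .env file itself. The sketch below uses a hypothetical helper, `check_env_file`, that only confirms the file exists and holds a non-empty HF_TOKEN that is not the placeholder. It cannot tell whether the token is valid or has inference permissions, and the "Invalid credentials" error may have other causes.

```shell
# Sketch only: check_env_file is an illustrative helper, not part of DeepSite.
# Returns 0 if the file defines a non-empty HF_TOKEN that is not the placeholder.
check_env_file() {
  env_file="${1:-.env}"
  if [ ! -f "$env_file" ]; then
    echo "missing: $env_file" >&2
    return 1
  fi
  # Print the value of every HF_TOKEN= line and keep the last one.
  token="$(sed -n 's/^HF_TOKEN=//p' "$env_file" | tail -n 1)"
  case "$token" in
    "")
      echo "HF_TOKEN is not set in $env_file" >&2
      return 1
      ;;
    THE_TOKEN_YOU_JUST_CREATED)
      echo "HF_TOKEN is still the placeholder in $env_file" >&2
      return 1
      ;;
  esac
  echo "HF_TOKEN is set in $env_file"
}
```

Run `check_env_file` from inside the cloned folder, or pass a path such as `check_env_file deepsite/.env`.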

I verified the steps. It launches, but when I submit a prompt I get the same response: Invalid credentials in Authorization header

Hi guys, I'm going to take a look at this and will keep you updated.

Using with locally running models would be cool too.

I did everything according to the steps above, it worked the first time. Thank you.

P.S. I updated Node.

it has started working for me now - many thanks!

Using with locally running models would be cool too.

I know right

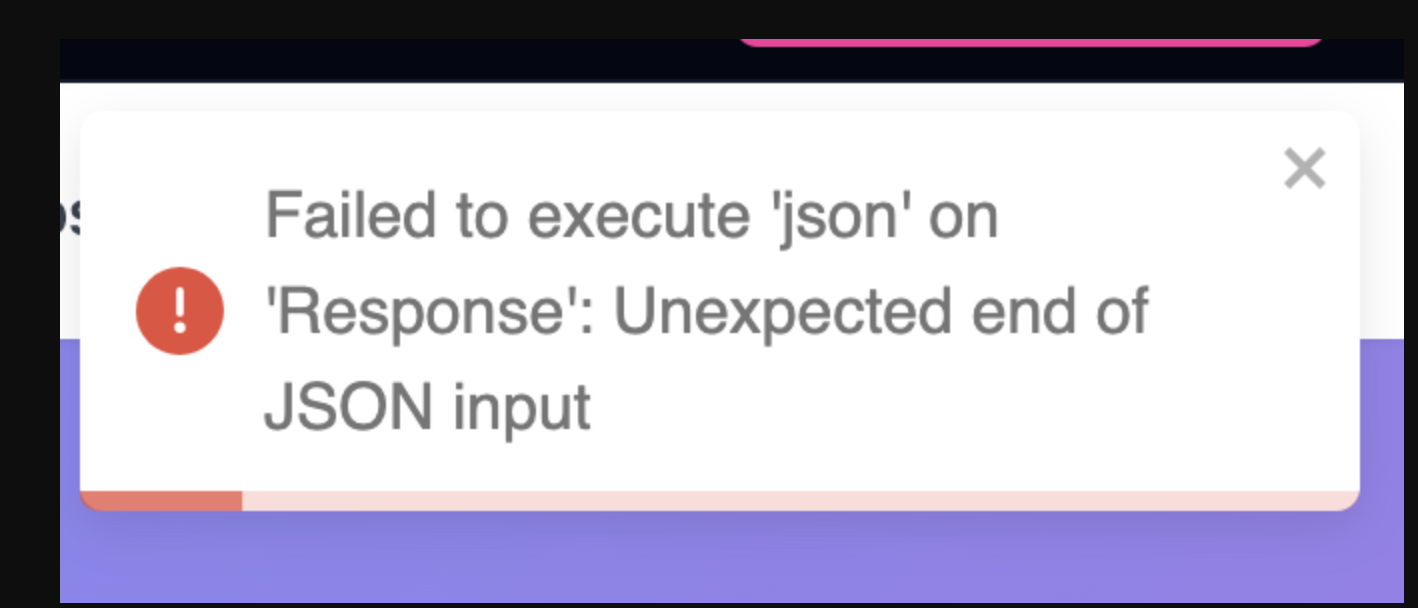

I used the Dockerfile, set the HF_TOKEN, and on the first try got the error message: We have not been able to find inference provider information for model deepseek-ai/DeepSeek-V3-0324. The error happens in the try/catch when calling client.chatCompletionStream.

Can I point this to my own DeepSeek API key and run it offline with that key, with nothing to do with Hugging Face?

I did so after the previous error with the inference provider, but I always run into the max output token limit and receive a website that suddenly stops. I wonder how the inference provider approach handles this differently. I can't explain it, since DeepSeek is limited to a maximum of 8k output tokens.

There are models now with well over 1M, so you could easily swap. Did you run the Docker setup and set the API key and .env file?

DeepSite uses DeepSeek (here, online), so that was the basis of my test. Online I receive a full website, but locally with my direct DeepSeek API key I don't. Yes, there are some models with much more output, including DeepSeek Coder V2 with 128k. But I'm still wondering about the differences between the DeepSeek platform API and the inference provider. It makes no sense to me.
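A page that "suddenly stops" is consistent with the model hitting its output-token cap. One rough way to spot that after the fact is to check whether the saved HTML ever closes. The sketch below is only a heuristic, with a hypothetical helper name, and it assumes you have saved the generated page to a file. If you call an OpenAI-compatible API directly, a `finish_reason` of `length` in the response is a more reliable sign of the same thing.

```shell
# Sketch only: looks_complete_html is an illustrative helper.
# Returns 0 if the file contains a closing </html> tag (case-insensitive).
looks_complete_html() {
  if grep -qi '</html>' "$1"; then
    echo "complete: closing </html> found"
  else
    echo "possibly truncated: no closing </html>" >&2
    return 1
  fi
}
```

For example, `looks_complete_html index.html` exits non-zero when the closing tag is missing, which suggests the output was cut off.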

Yes, you can use it without being PRO, but you are still subject to usage limits (https://huggingface.co/settings/billing)

Thanks.

Can run locally - offline - with OLLAMA server

Hello, I subscribed to the PRO option twice and was charged $10 twice, but my account still hasn't been upgraded.

How can I add a Google provider to this project? I want to use Gemini 2.5 Pro.